In this article, we will be discussing the different hash-based data structures such as HashTable, HashMap, and Concurrent HashMap implementation in Java in depth. We will discuss their advantages, disadvantages, differences between them and different use cases of it.

What is Hashing

Hashing is a technique used for storing and fast retrieval of a given value. This technique uses a hash function to convert any arbitrary value(hash) into a fixed-size value(index). As we know, in Java we have a native method hashcode() which can be used to generate an arbitrary value. This arbitrary value is then fed into a hash function to generate a fixed-size value. For simplicity, we can assume it to be a modulus operator.

As the hash value is relatively very large and it's practically not possible to create an array of that size as an Array requires a contiguous memory location. Hence, the hash function is used to generate a fixed-size value. Since it is of fixed size there are chances of collision and too much collision impacts the overall performance of our system.

Hence, a good hash function should map each possible key to a unique slot index.

But it is practically impossible to create such a hash function hence the collision becomes unavoidable but we can definitely minimize the collision.

Collision is the condition where two different arbitrary value gets converted to the same index. In this case, we can use different collision resolution techniques to find alternate index.

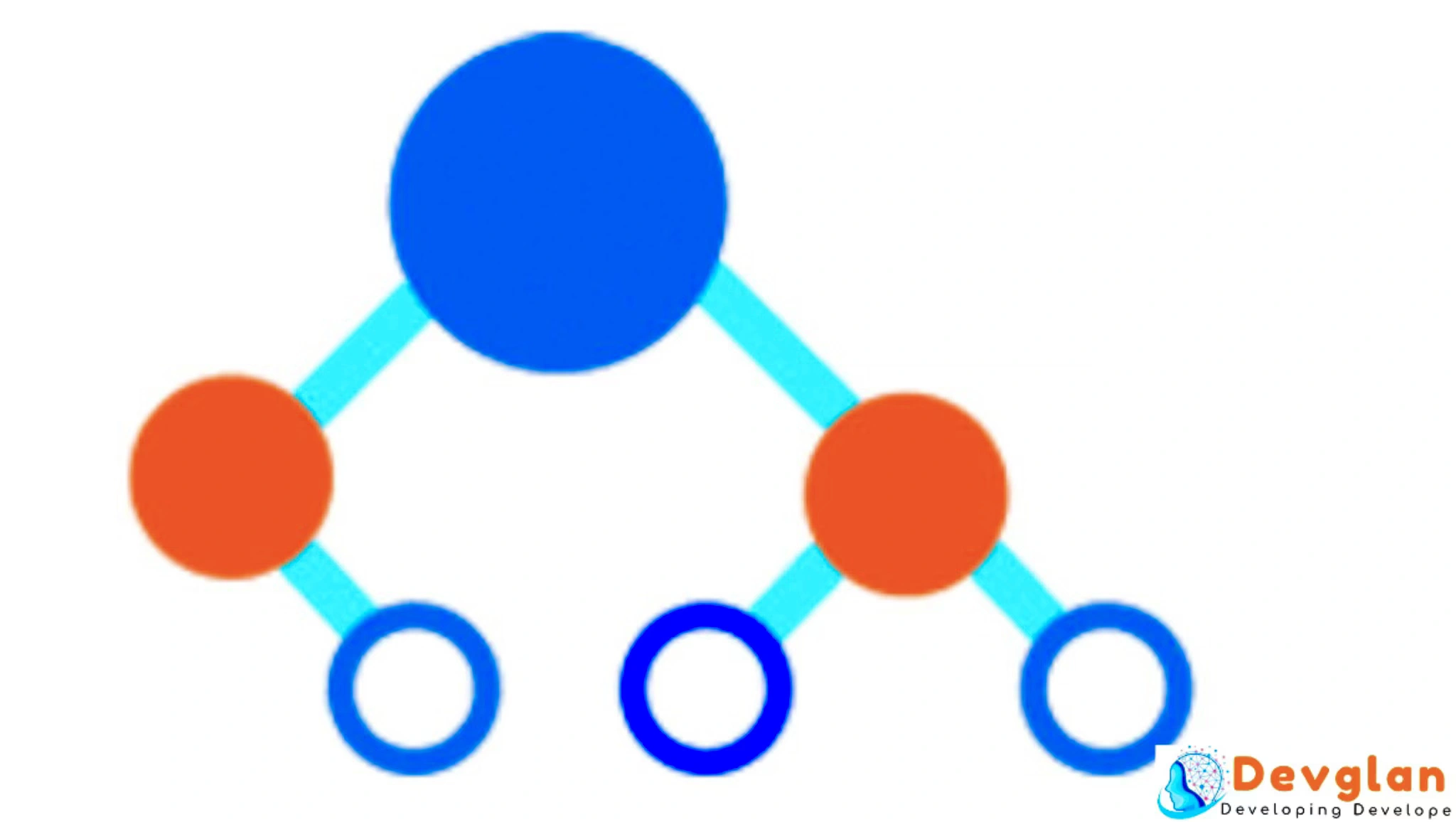

Most popular collision resolution techniques are open addressing and direct chaining. Java uses direct(separate) chaining and in the separate chaining when two or more records hash to the same index, then these records are constituted into a singly-linked list known as chain.

Since Java 8, the collision case is handled differently. When the no. of entry object in a bucket grows beyond a certain threshold(8) known as TREEIFY_THRESHOLD, the content of that bucket switches from using a LinkedList to a Red-Black Tree.

So far, we discussed few generic and common points that a hash-based data structure is based on. In Java, the very basic but widely used hash-based implementation is HashMap.

HashMap in Java

HashMap is an implementation of the Map interface that provides storage for key-value pairs. It internally uses the Hashing technique to find the exact bucket number and hence can provide constant-time performance for the basic operations such as to get and put.

It does not allow any duplicate key and allows only one null key. If the key is null then that element will be stored in a zero location in Entry array.

We have already discussed the HashMap internal implementation in my last article here in detail. Hence, let us directly see the code for its major operations and move forward.

HashMap Entrypublic class Entry<K, V> { private K key; private V value; private Entry<K, V> next; public Entry(K key, V value, Entry<K, V> next){ this.key = key; this.value = value; this.next = next; } }

HashMap is basically an array of entry object.

private int capacity = 16; private Entry<K, V>[] table; public CustomHashMap(){ table = new Entry[capacity]; } public CustomHashMap(int capacity){ this.capacity = capacity; table = new Entry[capacity]; } }

Below is the put and get operation.

public void put(K key, V value){ int index = index(key); Entry newEntry = new Entry(key, value, null); if(table[index] == null){ table[index] = newEntry; }else { Entry<K, V> previousNode = null; Entry<K, V> currentNode = table[index]; while(currentNode != null){ if(currentNode.getKey().equals(key)){ currentNode.setValue(value); break; } previousNode = currentNode; currentNode = currentNode.getNext(); } if(previousNode != null) previousNode.setNext(newEntry); } }

public V get(K key){ V value = null; int index = index(key); Entry<K, V> entry = table[index]; while (entry != null){ if(entry.getKey().equals(key)) { value = entry.getValue(); break; } entry = entry.getNext(); } return value; }

From a performance perspective, HashMap provides the best performance among all hash-based DS implementation but does not guarantee thread safety. Hence, this implementation can't be used in a multithreaded environment as HashMap is not synchronized.

But we can use Collections.synchronizedMap(HashMap) to provide method-level synchronization in case of a HashMap. This is very similar to HashTable with a difference of how synchronization is achieved in both of the DS.

Synchronized map has all its methods in a Synchronized block with a mutex where we can pass another object of the mutex which is optional as an argument by which the lock on the Map methods would be only on that Object. By default, if we do not pass this optional mutex object then this is used as a mutex.

HashTable

Hashtable is a synchronized implementation of Map. It is thread-safe and can be shared with many threads. It does not allow any null key or value.

HashTable implementation is such that all the methods of Hashtable are synchronized and hence there are performance implications with it.

public class Hashtable<K,V> extends Dictionary<K,V> implements Map<K,V>, Cloneable, java.io.Serializable { public synchronized V put(K key, V value) { ... } public synchronized V get(Object key) { ... } public synchronized boolean containsKey(Object key) { ... } public boolean containsValue(Object value) { ... } ... }

Also, the operations on hashtable may not be synchronized and we may have to add extra synchronization block to achieve it completely.

if(!hashtable.contains(key)){

hashtable.put(key,value);

}

If Thread t1 access the hashtable and check for key and at the same time Thread t2 checks for the key before t1 executes the put method then the two threads are inside the if block then there is a possibility of having a race condition when calling multiple methods outside of a synchronized block. Hence, the synchronized block is necessary.

synchronized { if(!hashtable.contains(key)){ hashtable.put(key,value); } }

Hence, concurrent hashmap seems to be a good choice in terms of thread safety among all the different Maps and performance is also guaranteed in a multi-threaded environment as it does not locks the complete Map rather it divides the map into segments and locking is done on these segments and the lock is acquired only during put operation on a particular segment but not on the get operation.

ConcurrentHashMap

As we discussed above, a hashMap is an array of Entry object and this Entry object is a linked list internally up to a TREEIFY_THRESHOLD. Now the concurrent HashMap is an array of Segments and each segment internally is an array of Entry objects. Again this entry object is a linked list node.

Now coming to concurrency, the lock is applied to a segment level and its a reentrant lock. Each segment has its own independent lock and this lock is applied at a segment level during the write operation. The default size of segment is 16 and hence there are 16 locks working independently.

To perform any operation on ConcurrenthashMap, first, we need to find the segment and then the bucket number inside that segment.

Conclusion

In this article, we discussed 3 different hash-based data structure in Java and looked into its internal implementations.